The field of artificial intelligence (AI) has experienced fast growth and increased public interest in recent years. Applications that once seemed to belong to the realm of science fiction are now a reality, such as autonomous cars, face and speech recognition software, and automatic language translation. These applications are certainly useful and we foresee an increasing use of AI in several domains; however, one aspect hinders the adoption of AI systems: many of these technologies act like black boxes.

Acting like a black box means that the mechanisms used and the features learned by many AI methods to make predictions are not understandable by humans. For some methods, such as deep neural networks, even AI experts are not able to tell which features the models learn in order to make predictions. For applications in domains where liability is an important issue, such as health, law, security, and finance, being able to explain why an AI algorithm made a prediction is critical. Imagine a doctor choosing a treatment for a patient based on current black box AI technology, versus an AI model that explains why the decision was made, which features of exams and health records were used to make it, when and how it could fail, and the level of uncertainty of its prediction. These explanations can help people understand the system, eventually trust it, and feel in control enough to keep using it.

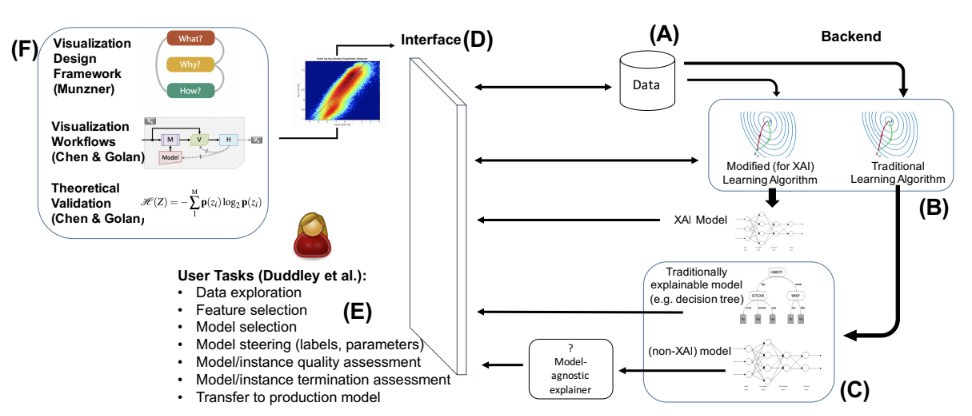

Currently, there are early initiatives for developing explainable AI (XAI), an area that attempts to create AI algorithms that are explainable to humans. The recently launched DARPA XAI initiative is a relevant example, and important conferences are holding related workshops and discussion panels. There has already been some progress, but most of the research has focused on developing XAI algorithms, neglecting the human side of the explanations and whether they are usable and practical in real-world situations. A recent survey indicates that current XAI research is not well integrated with knowledge from visualization, cognitive psychology and perception, i.e., how humans perceive, interpret and act upon the explanations. The fields of Information Visualization and Human-Computer Interaction (HCI) have much to offer to bridge this gap. Moreover, current work in XAI usually does not deploy or evaluate explanations in interactive applications or through studies with real users. In order to evaluate XAI rigorously, we should test it on practical applications with real data.

Goals

This proposal aims to research how to design effective and efficient interfaces for eXplainable AI (XAI) systems. More specifically, its focus is to develop and empirically evaluate a novel framework for designing and implementing interactive visual interfaces for XAI. This framework will not be limited to a specific domain, but we will use health care as the use case area. We will collaborate with expert physicians to address problems in two areas: evidence-based health care and hospital patient readmission. We will conduct user studies in this practical and critical domain, and we will develop and release software that allows users of Machine Learning (ML, a subarea of AI) algorithms to obtain visual interactive explanations of the models and results of popular supervised, semi-supervised and unsupervised methods. Two main strengths make this project feasible: (i) our current expertise in researching visual interactive interfaces for recommender systems, a particular application area of AI and information retrieval, and (ii) our ongoing collaboration with expert physicians on the problems described above.

Domains of Study

In order to validate our framework with a practical application, we will develop visualizations for XAI and will conduct user studies in the following domains:

- Screening of Biomedical Documents: In collaboration with the Epistemonikos foundation we will study the application of visual XAI for the practice of evidence-based health care, where physicians identify relevant research articles in order to answer clinical questions.

- Medical Records: In collaboration with a large health care hospital network, we will study, develop and evaluate visual XAI interfaces based on multimodal electronic health records. Our general goal here will be to help physicians predict and understand patient readmission, i.e., the cases where patients return to the hospital after a surgery or treatment.

Expected Results and Impact

Our research will help bridge the gap between the advances in XAI algorithms and the knowledge from the Visualization, HCI and cognitive psychology communities, with the goal of developing intelligible and usable intelligent systems. The results of this project should help users, developers and researchers of AI to deal with current problems of black box AI models such as model explanation, outcome explanation, model inspection, and transparent design. Our framework will not focus on a specific domain, but due to our use cases in health care, we expect to produce significant contributions to the challenges addressed. We also aim to motivate further research at the crossroads of AI and Visualization in different domains.